Gecko Robotics is a partner to the World Economic Forum (WEF). This article was originally posted on the Forum's Agenda.

To borrow Marc Andreessen's famous line about software from over a decade ago, artificial intelligence is eating the world. The problem is its diet.

Over the next decade, every industry will be fundamentally altered by AI, from healthcare to transportation, and even physics. It’s also becoming increasingly fashionable to suggest that AI has the potential to solve some of the world’s most pressing problems, including climate change and our struggle to reach net zero. Almost 90% of private and public sector CEOs believe AI is an essential tool to combat climate change. Yet despite this consensus on AI’s potential to mitigate the worst effects of climate change, we increasingly overlook a critical flaw: the data that makes up its diet.

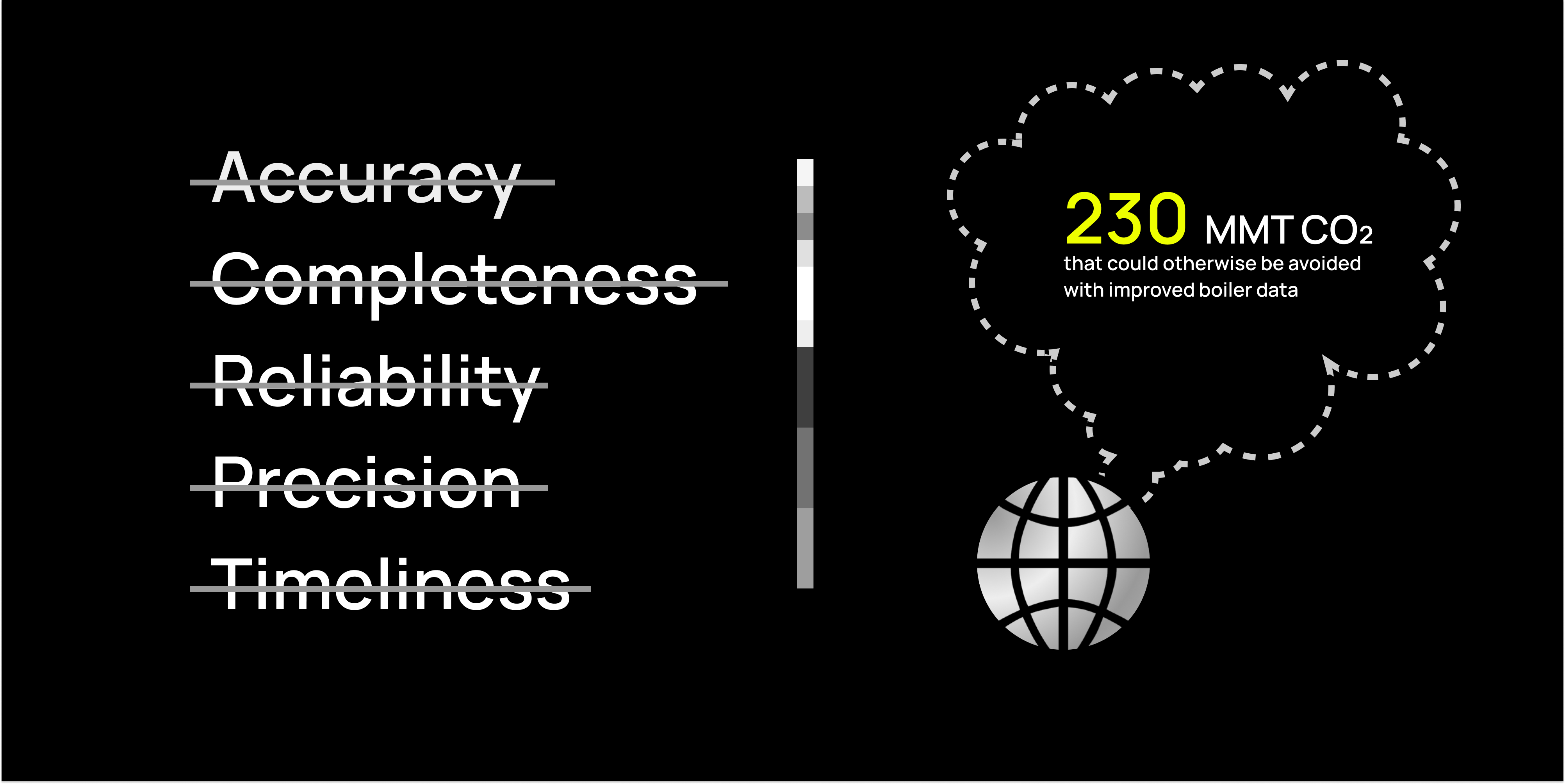

In a recent study, 75% of executives indicated they don’t have a high level of trust in their data. AI models are only as good as the data they are trained on. They rely on robust and granular data sets to identify the patterns and trends that allow them to learn and make predictions. The powerful analytical capabilities of AI, if applied to flawed data, can lead to unwarranted confidence in bad decisions. This vulnerability poses a significant risk, especially when addressing complex issues like climate change. If the data feeding AI lacks accuracy, completeness, reliability, precision or timeliness, the system becomes prone to misguided outcomes.

The energy sector, which accounts for 80% of emissions in the US and Europe, is a prime example of where data quality can make or break climate goals. Achieving a timely and just transition to net zero demands radical data clarity, particularly about power generation infrastructure and its emissions.

The Jetsons approach to data

AI's transformative impact on our daily lives demonstrates its potential for reducing emissions in critical infrastructure. In our personal lives, we already rely on data-driven insights to make efficient decisions. For instance, a November 2023 Boston Consulting Group report highlighted how AI-optimized navigation is guiding drivers to the most efficient routes, significantly cutting emissions across transportation networks at scale.

Similarly, AI-driven thermostat adjustments have proven their worth: Google's Nest thermostats cumulatively saved 113 billion kWh of energy between 2011 and 2022, roughly double Portugal’s annual electricity use. This shift towards data-informed decision-making offers a compelling model for applying AI more broadly, and it underscores how crucial accurate data is to achieving net-zero emissions in critical infrastructure across sectors.

Recent advances in robotics, sensors, drones and other data-collection technologies give us a chance to collect and analyze data on the built world in ways that were unimaginable just five years ago. These Jetsons-like innovations offer unprecedented access to ground-truth data gathered from the physical world.

Consider robots that crawl the surfaces of infrastructure, armed with ultrasonic and other sensors, to produce digital twins with high-fidelity data layers on asset health. Technologies like these allow us to understand and address challenges that were previously hidden. This revolutionary shift can mean the difference between seamless operations and catastrophic failures at facilities such as oil and gas refineries, power plants and manufacturing plants.

It also means we can feed this data to AI and train it to make accurate predictions, optimizing operations while improving sustainability metrics. According to the UN, AI-powered predictive maintenance can reduce downtime in energy production, ultimately reducing the planet’s carbon footprint.

Keeping data on the boil

To take one example, a recent report published by Rho Impact examined the potential environmental impact of deploying robotics and AI at scale against a single problem in the power generation industry: boiler tube failures.

The report notes that if we eliminate boiler tube failures inside power plants by pairing robotic inspections with AI-powered software, we could reduce carbon dioxide (CO2) emissions worldwide by as much as 230 million metric tonnes, equivalent to 4.8% of US emissions. When boiler tubes fail, the affected power plant must shut down, and backup generation is usually brought online to meet energy demand. Backup generation is less efficient and emits more CO2 per unit of energy produced: power generated on baseload can be up to 32% more efficient than the same amount of power generated by backup plants.

It gives me great hope that tangible innovations are already being deployed today, actively accelerating our progress toward net zero. Adopting technology in pragmatic, responsible ways will lead to significant sustainability gains. As leaders of industry and government, we have a moral and financial obligation to accelerate investment in results like these, which are right in front of us.

Like the most gifted athletes, even the most sophisticated AI systems cannot perform at their best without a good diet. This is the problem to solve. Over the next decade, every industry will be fundamentally altered by AI. However, we risk having the decisions that drive this change made with the help of algorithms trained on incomplete or unrepresentative data. We must feed these world-altering technologies first-order data sets. Global initiatives like net zero will rise or fall on our ability to get this right.